🌆 Your guide to AI: July 2023

Hi all!

Welcome to the latest issue of your guide to AI, an editorialized newsletter covering key developments in AI policy, research, industry, and startups during June 2023. Before we kick off, a couple of news items from us :-)

Last week, we hosted our 7th annual Research and Applied AI Summit in London, with an incredible line-up of speakers from industry and academia. Videos will start appearing on the RAAIS YouTube channel in the coming days. Subscribe to get notified!

To mark the Tony Blair Institute for Global Change’s new report A New National Purpose: AI Promises a World-Leading Future, Nathan participated in a panel on AI leadership, with Darren Jones, the Labour MP and Chair of the Business and Trade Select Committee, and Benedict Macon-Cooney, the Chief Policy Strategist at the TBI. You can watch it back here or read Nathan’s notes here.

Our friends and fellow newsletter writers at Form Ventures featured Nathan in their Playbook newsletter on the AI investing opportunity and the role of policymakers.

Nathan joined James from Cerebras to discuss the state of AI as of last week!

As usual, we love hearing what you’re up to and what’s on your mind. Just hit reply or forward this to your friends :-)

🌎 The geopolitics of AI

In late August 2022, NVIDIA’s A100 and H100 – their most powerful chips for AI applications – were added to the US Commerce Department’s export control list and put out of reach of Chinese companies. The company later announced that it was designing the A800 and H800 chips to fall just below the performance threshold set by the US ban. Droves of these chips have already been purchased by Chinese behemoths like Tencent and Alibaba. Chinese giants also resorted to renting compute on the cloud, and traffickers were of course already circumventing the controls. A great article from Reuters reports conversations with vendors in the Huaqiangbei electronics area in Shenzhen who could supply customers with A100 chips. “They told us straight up that there will be no warranty or support.” The customers for the chips were not limited to companies wanting to run AI models, but also included collateral victims of the AI chip ban like app developers and gamers. The traffic allegedly benefits the local authorities as well. The CEO of Beijing-based Megvii, the maker of the most used face recognition software in the world, estimated that there were around 40,000 A100 GPUs in China. And according to NVIDIA’s CFO, China historically accounted for 20-25% of NVIDIA’s revenue from data center-related products. Still, the more advanced H100s are apparently out of reach. More generally, while small quantities of A100 chips are relatively easy to find, exports of larger batches are apparently under control. In this context, according to the Chinese website Late Post, ByteDance has ordered more than $1B worth of GPUs from NVIDIA, probably (hopefully…) of the A800/H800 variety.

They did well to fill up on GPUs. Things are apparently going to get worse, maybe as soon as this summer. According to the Wall Street Journal, the Biden administration is contemplating banning the sale of even A800 chips without a license as part of an update of the export controls. It sure takes a whole lot of GPUs to build a chatbot as good as ChatGPT, but Baidu apparently has enough, as it recently claimed that its chatbot Ernie 3.5 was superior to GPT-3.5 on two LLM benchmarks, AGIEval (exam-heavy, designed by Microsoft) and C-Eval (Chinese-focused, designed by Shanghai Jiao Tong University, Tsinghua, and the University of Edinburgh) and even outperformed GPT-4 on Chinese-language capabilities. Baidu has been the Chinese industry’s leader in LLM development, and it is now hoping to reap the rewards of years of research. At least it might not have to fear foreign competition even in Hong Kong, as Microsoft/OpenAI and Google are limiting access to their chatbots, probably out of fear of seeing the chatbots generate content that violates Hong Kong’s strict new national security law.

All the current demand and expected growth of AI use has fueled NVIDIA’s valuation during the past few weeks. Who can compete with them and benefit from the supply crunch? AMD comes to mind, but their software lags far behind NVIDIA’s. Last month we covered how George Hotz founded a new company (tinygrad) to tackle this problem, only to then throw in the towel two weeks later because AMD’s software was just too fundamentally bad. To address this glaring improvement opportunity, AMD announced two partnerships, with PyTorch and Hugging Face. The first aims at making PyTorch immediately compatible with ROCm (AMD’s counterpart to CUDA) and running training jobs on AMD’s latest MI300 GPU. The second enters AMD in Hugging Face’s Hardware Partner Program, where developers from both companies will work to make models from the Hugging Face Hub run on AMD’s chips. AMD’s latest chips promise to be incredibly good individually, but they will probably lag behind NVIDIA’s in multi-server settings. More importantly, neither the partnerships nor tinygrad will solve AMD’s software woes any time soon. So NVIDIA still has some breathing room. Read the very instructive SemiAnalysis blog posts on this here and here.

The European Parliament approved the draft of the AI Act this month. We have covered all the details of the AI Act over past newsletters and State of AI reports (see Politics sections 2021, 2022). But the AI Act has been constantly updated since, with rules specifically designed to regulate the use of foundation models. Nice work from Stanford’s Center for Research on Foundation Models graded foundation model providers according to their compliance with the draft EU AI Act. They used the 12 draft AI Act requirements that could be measured using public information and graded the providers on each on a scale of 0 to 4. The criteria included the use of copyrighted data (on which all but HuggingFace/BigScience’s BLOOM (3) and GPT-NeoX (4) scored 0), energy consumption, documentation, and risk mitigation. BLOOM came out on top with a score of 36/48, while Anthropic’s Claude was last with 7/48. But more than the scores, the researchers highlighted that a compliant foundation model isn’t totally out of reach, as taking the pointwise maximum between BLOOM and GPT-4 over each criterion resulted in a score of 42/48, i.e. close to 90% of the maximum. The achievability of compliance has not, however, stopped a range of prominent EU companies from warning that the Act could have a serious impact on competitiveness and technological sovereignty. A letter signed by 150 executives, including from European champions like Heineken, Siemens, and Renault, warned that the AI Act risked making compliance prohibitively expensive, and instead advocated a risk-based approach that sounded eerily similar to the one being pursued across the Channel.

While the EU took its AI Act victory lap, we have seen the UK attempt to get back on the front foot. Firstly, the UK announced plans to host a major summit for “like-minded” nations (read: not Russia or China) on the future of AI. The agenda for this summit remains unclear, but it seems as though the government is attempting to revive its traditional position as a “bridge” between divergent EU and US regulatory regimes. Alongside its global aspirations, the government made a number of domestic changes. We’re seeing the start of a welcome, if overdue, institutional shake-up. The long-standing AI Council is likely to be phased out, while State of AI co-author Ian Hogarth will be leading up a new taskforce focused on foundation models. The AI Council, much like the Alan Turing Institute (currently in the crosshairs of the Tony Blair Institute), was heavy on industry grandees but lighter when it came to following frontier AI research.

It remains to be seen, however, how the UK’s aspirations around global leadership will shape up. The Prime Minister revealed his “safe strategy for AI” during London Tech Week, which included encouraging steps around safety, such as a commitment from DeepMind, OpenAI, and Anthropic to provide early access to frontier models for AI safety research. However, the commitments about using AI to overhaul public service delivery remained vague. For example, a new £21 million fund to allow hospitals to fund the development of AI tools sounds positive in theory, but it doesn’t fix the very real structural problems that make it hard to digitize healthcare. Currently, innovators have no single gateway through which to sell new tools into the NHS. That means, if you’re a start-up, you have to tour individual hospital trusts in the hope of winning small contracts in challenging procurement processes. That doesn’t even cover the poor quality of data and outdated technology you have to work with if you do win. AI might be necessary to improve public services, but it won’t be sufficient.

🍪 Hardware (kinda)

Wayve released videos of GAIA-1 in action. GAIA-1 is a generative video model they trained to predict the next video frame from hours of recorded driving. The model is capable of generating several minutes of video from a few seconds of input while remaining very coherent. Notably, the generated videos can be conditioned on the actions of all the parties involved in the driving scene, from the ego-vehicle to other vehicles, pedestrians, etc. Canadian self-driving vehicle startup Waabi presented their simulation tool for making the training of the vehicles scalable and controllable. Their sensor simulator, dubbed UniSim, “takes a single recorded log captured by a sensor-equipped vehicle and converts it into a realistic closed-loop multi-sensor simulation”. In other words, given a situation, UniSim can generate different controllable scenarios along with the corresponding sensor logs, even adapting to different vehicles (for example the elevated seat of a truck). They also have a CVPR paper you can read. Meanwhile, it seems that officials are being rather heavy-handed (or downright malicious) when dealing with self-driving car malfunctions. The state of California is accusing San Francisco officials of data manipulation, a Reuters reporter writes. “In the 4 @Waymo traffic collisions SF cited to prove driverless cars are less safe, 3 were the Waymos being rear-ended and 1 didn't even involve any cars touching, CA says.”

But will any of this matter? Tesla shared a few snippets of its own model-generated video. Tesla owns the largest fleet of vehicles equipped with cameras that record millions of hours of training data. And now it is seriously ramping up the compute available to train and deploy its models. It said it will start producing its Dojo supercomputer in 2023 based on the D1 chip it designed. By 2024 the total amount of compute available will exceed 100 exaFLOPS, or equivalently what 300k A100 GPUs can handle. Note that Dojo is specifically designed to handle images and would struggle to train LLMs. With such a massive edge in compute and real-world dataset diversity, the self-driving car battle seems to be Tesla's to lose.
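As a rough sanity check on the A100 comparison above, the sketch below divides 100 exaFLOPS by the peak dense BF16 tensor throughput of a single A100 (312 teraFLOPS per NVIDIA's datasheet). Comparing at peak rather than sustained throughput, and at BF16 precision, are assumptions made here for illustration, and the function name is hypothetical.

```python
# Peak dense BF16/FP16 tensor-core throughput of one NVIDIA A100 (datasheet value).
# Assumption: the comparison in the text is made at peak, not sustained, throughput.
A100_PEAK_FLOPS = 312e12

# 100 exaFLOPS, the Dojo compute figure quoted in the text.
DOJO_TARGET_FLOPS = 100e18


def a100_equivalents(total_flops: float, per_gpu_flops: float = A100_PEAK_FLOPS) -> float:
    """Number of GPUs whose combined peak throughput matches total_flops."""
    return total_flops / per_gpu_flops


equiv = a100_equivalents(DOJO_TARGET_FLOPS)
print(f"{equiv:,.0f} A100-equivalents")
```

Under these assumptions the result lands a little above 300k GPUs, consistent with the order of magnitude quoted.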

🏭 Big tech startups

Don’t be surprised if this section sounds like a funding/exits highlight reel: the biggest news this month came from young startups building LLMs and getting massive funding to do so. UC Berkeley/Apache Spark spinout Databricks acquired MosaicML for $1.3B only 2 years after it was founded. Mosaic had raised $37M up to this point. The startup was born with the goal of helping companies train their own generative AI models on their own data, and recently started offering inference services as well. But it is probably better known for impressive engineering feats like training Stable Diffusion from scratch for less than $50K, or building state-of-the-art fully open-source LLMs with long context length. More generally, judging by the time separating publication of research on language models and the publication of MosaicML’s implementations, their speed of execution is phenomenal. And that translates into $20M in annual recurring revenue. For a 2-year-old startup, that’s not too bad.

Databricks’ intensifying battle with Snowflake, which recently acquired LLM-powered search startup Neeva, has been commented on at length; see, e.g., here, here, and here. The most relevant point here is a recently announced partnership between NVIDIA and Snowflake. Snowflake will start using the NVIDIA NeMo platform (covered in the April’23 Guide to AI) in order to help companies train their models on their own data. When your data is sitting somewhere, it’s convenient to keep it where it is to train your models. The latest Snowflake and Databricks moves confirm customer appetite for both customization of their models and full integration of their machine learning stack.

If you thought a two-year-old software company sold for $1.3B was spicy, hold on to your seat. Inflection AI, the 35-person startup led by DeepMind cofounder Mustafa Suleyman, raised a $1.3B round at a $4B valuation led by Microsoft (remember that Microsoft will eventually hold a 49% stake in OpenAI, an Inflection competitor) and NVIDIA. LinkedIn founder and Greylock partner Reid Hoffman (Mustafa Suleyman is a venture partner at Greylock), Bill Gates, and ex-Google CEO Eric Schmidt participated in the round. But the fundraise itself isn’t the biggest news here: Inflection is using that money to build what would be the largest AI cluster in the world, with 22k NVIDIA H100 GPUs on CoreWeave. Assuming a 3-year lease, this cluster will cost close to $1B, undoubtedly implying a “round trip” from its cloud investors to Inflection and back. Part of it – 3,500 NVIDIA H100 GPUs – led the MLPerf leaderboard, a benchmark of AI chips, in LLM training. Keep an eye out for an update of the State of AI Compute Index in the coming weeks. Inflection also announced that it had trained an LLM (Inflection-1) of similar size to GPT-3.5 and PaLM-540B, and that its model was “best in its compute class” on all benchmarks it was evaluated on. People will soon be able to evaluate it themselves via Inflection’s conversational API.
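To make the cluster cost estimate above concrete, the sketch below backs out the hourly price per GPU implied by the figures in the text (22k GPUs, a 3-year lease, roughly $1B in total). It assumes every GPU is billed for every hour of the lease and ignores networking, storage, and any discounts; the function names are illustrative only.

```python
HOURS_PER_YEAR = 24 * 365


def lease_cost(num_gpus: int, years: float, usd_per_gpu_hour: float) -> float:
    """Total lease cost, assuming every GPU is billed for every hour."""
    return num_gpus * years * HOURS_PER_YEAR * usd_per_gpu_hour


def implied_hourly_rate(total_usd: float, num_gpus: int, years: float) -> float:
    """Per-GPU hourly price implied by a total lease cost."""
    return total_usd / (num_gpus * years * HOURS_PER_YEAR)


# Figures taken from the text: 22k H100s, 3-year lease, close to $1B.
rate = implied_hourly_rate(1e9, 22_000, 3)
print(f"Implied price: ${rate:.2f} per GPU-hour")
```

The implied rate is only as good as the "close to $1B" estimate it is derived from, so treat it as an order-of-magnitude figure.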

Meanwhile, in France, weeks-old Mistral AI, founded by Chinchilla and LLaMa lead authors Arthur Mensch and Guillaume Lample respectively, raised a €105M seed round. The company says it wants to build the first serious European contender to the American leaders (take that Aleph Alpha). They want to build open source models that are much better than existing ones, trained on high-quality proprietary and identifiable public data to avoid copyright issues. You can read more details on their strategy here. In this context, Cohere’s $270M Series C raise almost came as a reality check. Cohere, which was founded in 2019, is now valued at $2.2B.

We also got some rumors about what DeepMind is up to. Demis Hassabis said DeepMind’s next chatbot, dubbed Gemini, will surpass ChatGPT. “At a high level you can think of Gemini as combining some of the strengths of AlphaGo-type systems with the amazing language capabilities of the large models. [...] We also have some new innovations that are going to be pretty interesting.” Meanwhile, Sam Altman said GPT-5 is still not training, and that OpenAI was working on the technical innovations it needs before it can start training it. This is another signal that data/parameter/compute scaling is reaching a (high performance) plateau and that it’s time (i) for technical innovation to shine in order to further push model performance, and (ii) for companies to figure out how to build businesses, as they now have a better idea of what capabilities to expect from LLMs.

The latter idea justifies why big tech companies are dedicating large funds to invest in new AI-based businesses. Here’s just a sample: AWS ($100M for generative AI), Salesforce Ventures ($500M), Workday ($250M), Accenture ($3B), and PwC ($1B). So many hammers. So few nails. No wonder valuations are skyrocketing. Here’s a chart of funds raised in generative AI from Dealroom:

Also on our radar:

lmsys.org evaluated models advertised to be good on long sequences and found that most open source LLMs actually don’t live up to their promise and see their accuracy plummet after a few thousand input tokens. In contrast, GPT-3.5 and Claude are perfect on their respective advertised maximum context lengths. They must be, as they’re actually selling a guaranteed performance. lmsys introduced a new technique, also independently discovered by Meta, to extend the context length that a model can handle.

Open source LLM tooling raises VC funding:

LlamaIndex, which builds a framework to connect users/companies’ private data with LLMs, raised an $8.5M seed round led by Greylock. The company started out as an open source project last November.

ggml, “a tensor library for machine learning to enable large models and high performance on commodity hardware”, received funding from Nat Friedman and Daniel Gross.

The AI community keeps pushing open source models:

Falcon-40B is now fully open source. The initial release was criticized because its license didn’t authorize commercial use.

Together released RedPajama 7B, an instruction fine-tuned model trained on a fully open-source 5TB dataset without any outputs from closed LLMs like OpenAI’s or Anthropic’s.

OpenLLaMa 13B, an exact reproduction of LLaMa which allows its users to use it commercially, was released.

Salesforce released XGen, a series of 7B LLMs with up to 8K context length trained on open source datasets.

WizardCoder is the latest programming language model in town. “It surpasses Claude-Plus (+6.8), Bard (+15.3) and InstructCodeT5+ (+22.3) on HumanEval Benchmarks.”

🔬 Research

Faster sorting algorithms discovered using deep reinforcement learning, Google DeepMind. DeepMind is back with a model (or rather a class of models) trained purely using deep reinforcement learning. The task at hand is to improve the most basic algorithms which underpin much of what companies and individuals do on computers; think of algorithms for sorting and hashing for example. Many of them are used trillions of times a day and haven’t changed in years, so you might think there would be nothing left to do. AlphaDev, an RL algorithm based on AlphaZero (DeepMind’s famous chess/shogi/go playing algorithm), challenges this thought. AlphaDev doesn’t train an agent to generate C++ or Python code that many of us would understand. Instead, the agent works directly on assembly code, where, for example, explicit instructions on memory management are written. This gives the algorithm even more room to find optimizations. Reformulating this as a reinforcement learning problem: the current state is a concatenation of a vector containing the representation of the currently generated algorithm in assembly, and a second vector with the state of memory and registers after the algorithm is run on predefined inputs. At time t, the agent receives the state and writes new instructions or deletes existing ones. It then receives a reward that depends on the algorithm’s correctness and latency, and it is trained to maximize this reward. “Winning the game corresponds to generating a correct, low-latency algorithm using assembly instructions. Losing the game corresponds to generating an incorrect algorithm or a correct but inefficient algorithm.” AlphaDev improved the speed of sorting 3, 4 or 5 items. The corresponding algorithms were then reverse-engineered from assembly to C++, and then integrated in the ubiquitous LLVM libc++ library. Interestingly, the optimizations found by AlphaDev were remarkably simple. So some wondered if GPT-4 could have figured out the solution.
And it seems it did for sort3! Although it’s not clear how reproducible this is or whether GPT-4 optimized the code correctly for the “right” reasons (AlphaDev specifically aims to be correct and reduce the latency), this is another instance of the broad range of problems that GPT-4 could possibly tackle. In Guide to AI April’23, we covered NVIDIA’s Voyager, which plays Minecraft by writing code using GPT-4 and without any RL. Another paper that goes in the same direction is SPRING: GPT-4 Out-performs RL Algorithms by Studying Papers and Reasoning, from CMU, NVIDIA, Ariel University, and Microsoft Research. Here, an LLM-based agent plays Crafter by using not only information about its current state, but also the LaTeX source of the academic paper behind the game. Yet another interesting paper is Supervised Pretraining Can Learn In-Context Reinforcement Learning, from Microsoft, Stanford, and Google DeepMind. It examines the in-context learning capabilities of decision transformers.
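The reinforcement learning formulation of AlphaDev described above (state, add/delete actions, and a reward combining correctness and latency) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the single `cswap` instruction is hypothetical (AlphaDev operates on real assembly instructions), program length stands in for measured latency, and the reward weighting is arbitrary. No agent is trained; the loop simply replays a known-good sequence of actions.

```python
from itertools import permutations

# Hypothetical toy instruction: ("cswap", i, j) swaps registers i and j
# when reg[i] > reg[j]. This is a stand-in for real assembly instructions.
def run(program, registers):
    regs = list(registers)
    for op, i, j in program:
        if op == "cswap" and regs[i] > regs[j]:
            regs[i], regs[j] = regs[j], regs[i]
    return tuple(regs)

# Predefined inputs on which every candidate program is executed.
TEST_INPUTS = list(permutations((1, 2, 3)))

def observe(program):
    """State: the program so far plus register contents after running it on the test inputs."""
    return tuple(program), tuple(run(program, x) for x in TEST_INPUTS)

def reward(program, latency_weight=0.01):
    """Fraction of inputs sorted correctly, minus a length penalty used as a latency proxy."""
    correct = sum(run(program, x) == tuple(sorted(x)) for x in TEST_INPUTS)
    return correct / len(TEST_INPUTS) - latency_weight * len(program)

def step(program, action):
    """Apply an action: ("add", instruction) appends, ("delete", index) removes."""
    kind, arg = action
    if kind == "add":
        return program + [arg]
    return program[:arg] + program[arg + 1:]

# Replay a fixed sequence of actions; a real agent would choose them to maximize reward.
program = []
for instr in [("cswap", 0, 1), ("cswap", 1, 2), ("cswap", 0, 1)]:
    program = step(program, ("add", instr))
    print(len(program), round(reward(program), 3))
```

The reward rises as more test inputs come out sorted, while each extra instruction costs a little, which is the tension between correctness and latency that the agent has to resolve.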

TinyStories: How Small Can Language Models Be and Still Speak Coherent English? and Textbooks Are All You Need, Microsoft Research. These two papers are all about efficiency. The first paper set out to discover whether small language models fail to generate text as coherent as LLMs’ because they are in some sense “overwhelmed” by the large datasets that researchers attempt to train them on. This turned the task into building a dataset that could “preserve the essential elements of natural language, such as grammar, vocabulary, facts, and reasoning, but that is much smaller and more refined in terms of its breadth and diversity.” The dataset, called TinyStories, consisted of short stories written by GPT-3.5 or GPT-4, which were automatically curated by using GPT-4 to grade them. They showed that models as small as 28M parameters can be competitive with much larger ones like GPT2-XL (1.5B). Along the same line of research, other researchers from Microsoft generated a small dataset of well-curated code programs on which they trained a language model called phi-1. phi-1 achieved a 50.1% score on HumanEval, a challenging benchmark of code generation from docstrings. It’s the only sub-10B parameter model to achieve >50% on HumanEval. An additional benefit of the small size of these language models is that their neurons were more interpretable than those of larger ones. “We observe that both attention heads and MLP neurons have a meaningful function: Attention heads produce very clear attention patterns, with a clear separation between local and semantic heads, and MLP neurons typically activated on tokens that have a clear common role in the sentence”.

RoboCat: A Self-Improving Foundation Agent for Robotic Manipulation, Google DeepMind. A little under a year ago, DeepMind wrote a paper on Gato, a generalist agent achieving reasonable performance on a range of text, image, and robotics tasks. RoboCat, which is based on Gato, is geared towards robotics tasks. The authors aimed to determine whether they could build an agent that exhibits positive transfer for robotics tasks: i.e. such that its performance across different tasks is better than the performance of multiple agents each trained on one individual task. They consider the task of controlling a robot arm. To train RoboCat, they (1) start with 100-1000 human demonstrations of a new task, (2) fine-tune a copy of RoboCat on these demonstrations, (3) let the copy practice the new task, on average 10K times, generating new training data which is incorporated into RoboCat’s training dataset, and (4) retrain RoboCat on this new, larger dataset mixing real-world, simulated, and self-generated data. The process is then repeated on each new task. The agent is trained using different robotic arms. Surprisingly, the researchers showed that although it was trained using robotic arms with two fingers, it needed only a few hours to adapt to a three-fingered robot. More generally, they showed that, trained this way, RoboCat was able to adapt to new tasks with fewer human demonstrations. Another interesting question the paper answers is whether large visual models pre-trained on internet-scale data could outperform RoboCat when fine-tuned on robotics tasks. They test this on an eye-popping 59 open-source models and show that RoboCat beats them by a large margin.
If you want to learn more about this, do yourself a favor and read Eric Jang’s blog post detailing what’s really new here and what matters for AI and robotics, including some thoughts that put works in the field into perspective like “In 2023, robotics research, mine included, continues to be largely irreproducible. It is the elephant in the room of the robotic learning community.” and “The systems involve so much complex engineering that asking two different grad students to implement the same thing will probably yield different results too. The choice of whether you bolt the board to the table or not probably has a larger effect size on performance than any of your baseline ablations, and hundreds of these choices are implicitly baked into the data distributions without the researcher being aware of it.”

Also on our radar:

Simple and Controllable Music Generation, Meta AI. Introduces the latest and most efficient SOTA text-to-music generation model called MusicGen. Samples here and demo here. Based on the CNN-based EnCodec audio tokenizer which allows reconstructing music at a high frame rate from a low frame rate discrete representation.

Image Captioners Are Scalable Vision Learners Too, Google DeepMind. They show that encoder-decoder models trained on image-caption pairs (Image Captioners) can be as good as or better than models trained using contrastive learning on batches of images and language captions for image classification.

OmniMotion: Tracking Everything Everywhere All at Once, Cornell, Google, UC Berkeley. Presents a very impressive motion tracking method that is able to track objects continuously, even through occlusions.

How Far Can Camels Go? Exploring the State of Instruction Tuning on Open Resources, Allen Institute for AI, University of Washington. Another take on the utility of publicly available (or cheaply obtainable) instruction tuning datasets (see the research section of Guide to AI April’23). “Our experiments show that different instruction-tuning datasets can uncover or enhance specific skills, while no single dataset (or combination) provides the best performance across all evaluations.”

Mind2Web: Towards a Generalist Agent for the Web, The Ohio State University. A dataset of language instructions and human action sequences for agents that can browse the web. Mind2Web contains 2,350 diverse tasks from 137 websites.

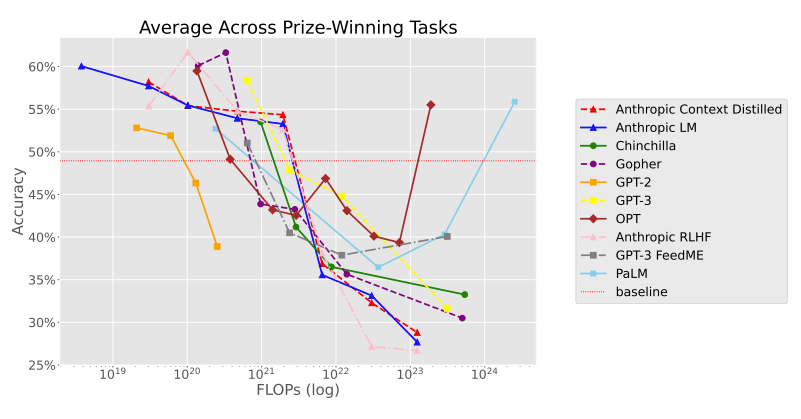

Inverse Scaling: When Bigger Isn’t Better. NYU, Anthropic and others launched a competition with a $250k prize pool, aiming to discover tasks on which scaling language models (in size, training data and compute) resulted in a drop in performance. That would mean that the model’s objective (increasing the log-likelihood) was increasingly “misaligned” with the intended objective (solving the task at hand). The paper presents the winning tasks (see the introduction of the article for a quick summary).

StableRep: Synthetic Images from Text-to-Image Models Make Strong Visual Representation Learners, Google, MIT. Pretraining on synthetic images > training on real images. Specifically, “with solely synthetic images, the representations learned by StableRep surpass the performance of representations learned by SimCLR and CLIP using the same set of text prompts and corresponding real images, on large scale datasets. When we further add language supervision, StableRep trained with 20M synthetic images achieves better accuracy than CLIP trained with 50M real images.” But don’t get too excited. The Curse of Recursion: Training on Generated Data Makes Models Forget, from Oxford, Imperial College, UofT, Cambridge and the University of Edinburgh, showed that, in language generation, training on model-generated content resulted in what they call “model collapse”: “a degenerative process affecting generations of learned generative models, where generated data end up polluting the training set of the next generation of models; being trained on polluted data, they then mis-perceive reality.”

Human or Not? A Gamified Approach to the Turing test, AI21 Labs. AI21 Labs ran the largest Turing Test to date, with 1.5M users. Users chatted with either another human or a language model, and they had to guess whether it was one or the other. The users guessed correctly in 68% of the games.

💰 Startups

🚀 Funding highlight reel

This month saw its fair share of large funding deals, many of them going to foundation model builders (or as some would jokingly say, going to NVIDIA’s pockets).

As we covered in the big tech section, Inflection raised $1.3B at a $4B valuation in a round led by Microsoft and NVIDIA.

Cohere, whose LLMs regularly rank among the very best in LLM benchmarks, raised a $270M funding round at a $2.2B valuation led by Inovia Capital.

France finally has its LLM company, called Mistral. The company was founded 4 weeks ago and has already raised a €105M seed round from Lightspeed Venture Partners. The founding team includes Arthur Mensch, the lead author of DeepMind’s Chinchilla, and Guillaume Lample, who led the release of LLaMa.

Reka AI, founded by LLM researchers Yi Tay (Google) and Dani Yogatama (DeepMind), raised a $58M seed round led by DST Global Partners and Radical Ventures. Reka is building “enterprise-grade state-of-the-art AI assistants for everyone regardless of language and culture.”

Runway, the generative AI video startup, raised a $141M extension to their Series C from Google and NVIDIA.

Typeface, which generates content for businesses, raised a $100M funding round led by Google and Salesforce. The deal values Typeface at $1B.

Synthesia, which turns text into speech uttered by human-like avatars, seems to be relentlessly growing. It raised a $90M Series C led by Accel that values the company at $1B.

Celestial AI, a hardware company focused on designing tools that decouple memory from compute and solve the data transmission problem on chips (memory is the actual bottleneck for training many ML models), raised a $100M Series B led by IAG Capital Partners, Koch Disruptive Technologies and Temasek’s Xora Innovation fund.

Augmedics, which builds an AR platform to improve the way surgeons perform spinal surgery, raised an $82.5 million Series D led by CPMG.

Granica, which dubs itself the AI Efficiency Platform – it tracks opportunities to reduce the cost companies pay for storing and accessing data on the cloud – raised a $45M seed round led by NEA and Bain Capital Ventures.

French AI radiology company Gleamer raised a $30M Series B led by Supernova Invest and Heal Capital.

Enterprise search company Vectara raised a $29M seed round from Race Capital. The company claims it can handle search queries of arbitrary length and add and remove data from its search index at its clients’ will.

CalypsoAI, which builds tools to monitor generative AI models deployed by enterprise customers, raised a $23M Series A-1 round led by Paladin Capital Group.

Faros AI, whose ambition is to help software engineering teams better monitor their processes using AI, raised a $20 million Series A led by Lobby Capital.

Another company that came out from stealth with a big round is Contextual AI, which raised $20M in seed funding led by Bain Capital Ventures. Contextual AI aims to improve how LLMs use external tools (think of ChatGPT plugins) in order to help enterprise users.

Household name text-to-speech platform ElevenLabs raised a $19M Series A from Nat Friedman, Daniel Gross, and Andreessen Horowitz.

BentoML, which will create tools to help speed up AI-based app building, raised a $9M seed round led by DCM Ventures.

LlamaIndex, which builds a framework to connect users/companies’ private data with LLMs, raised an $8.5M seed round led by Greylock. The company started out as an open source project last November.

🤝 Exits

MosaicML was acquired by Databricks for $1.3B, inclusive of retention packages. The deal is driven by the desire of organizations to train LLMs and other large models on their own data, increasingly residing within Databricks.

Casetext, a 10-year-old legal technology startup, was acquired for $650M in cash by Thomson Reuters. The company’s product does document review, legal research memos, deposition preparation, and contract analysis, powered by GPT-4, which it got early access to. This is a prime example of a SaaS company that wasn’t born AI-first but managed to strategically apply LLMs to reinvigorate its business and drive customer value. As a system of record for legal documents with a large number of firms using the software, Casetext was in a great position to be supercharged with AI.

---

Signing off,

Nathan Benaich, Othmane Sebbouh, Alex Chalmers 2 July 2023

Air Street Capital | Twitter | LinkedIn | State of AI Report | RAAIS | London.AI

Air Street Capital invests in AI-first technology and life science entrepreneurs from the very beginning of your company-building journey.
