Hi all!

Welcome to the latest issue of your guide to AI, an editorialized newsletter covering key developments in AI research, industry, geopolitics and startups during March 2023. We’ve now shot past 21,000 subscribers, thank you for your readership! Before we kick off, a couple of news items from us :-)

With LLMs emerging as a foundational building block for AI-first products, we have lots of best practices, tools and design patterns to develop as an industry.

We’re therefore hosting meetups in New York City on April 25th (register here) and San Francisco on April 27th (register here) to demo and discuss these best practices amongst peers. Similar to our London.AI and RAAIS events, we’ll host 100 engineers, researchers, product builders, designers and founders in AI with ample time to make new connections!

Register for our one-day RAAIS conference on research and applied AI on 23 June 2023 in London. We’ll be hosting speakers from Meta AI, Cruise, Intercom, Genentech, Northvolt and more to come!

FYI, you might have to read this issue in full online vs. in your inbox.

🏥 Life (and) science

Bristol Myers Squibb (BMS) is continuing its deal-making with AI startups. Recall that in 2021, BMS entered into a deal with Exscientia (drug design) worth up to $1.2B, and in 2022 it partnered with Owkin (drug trials) for a payout of up to $100M and an $80M equity investment. This month, BMS announced a collaboration with London-based CHARM Therapeutics “for the identification and optimisation of compounds against BMS selected targets”. CHARM claims to have built the first successful deep learning-based protein-ligand cofolding algorithm. Think of an AlphaFold-like system that is capable of predicting not only how a single protein would fold, but what both the ligand (e.g. drug) and the protein causing the disease would look like in 3D. The figures for the deal haven’t been shared, but CHARM will receive both upfront payments and an equity investment.

Also in structural biology, Gandeeva Therapeutics will partner its cryogenic electron microscopy capabilities with Moderna for a drug development program. This technique unlocks high-resolution empirical structure determination of proteins, which is critical for precision drug design. Think of it as the “real world” counterpart to AlphaFold’s “in silico” predictions of protein structure.

Exscientia announced this month that 2 of the oncology candidates that emerged from a collaboration with Celgene (acquired by BMS in 2019) are heading into clinical trials. Exscientia now has 3 candidates in clinical trials and 2 others in IND-enabling studies. Interestingly, BMS could have exercised an option on these candidates itself, but didn’t. Exscientia now owns rights to both compounds.

🌎 The (geo)politics of AI

More than a thousand notable tech personalities (Musk, Wozniak) and AI experts (Yoshua Bengio, Gary Marcus, Stuart Russell, Emad Mostaque, many DeepMind researchers, etc.) signed an open letter asking for a halt on large-scale AI experiments. The letter has been criticized for several reasons, even by experts well aware of the safety implications of rapidly developing AI systems. Some thought the letter didn’t go as far as it should, arguing that AI development should be shut down altogether rather than simply temporarily halted. In fact, a TIME op-ed by MIRI researcher Eliezer Yudkowsky even (crazily) invoked bombing GPU clusters that don’t abide by a shutdown, and argued that the default path for AGI is to destroy all of humanity. Some considered that a 6-month halt only means 6 months of development in secret, especially given the financial incentives. Indeed, Andrew Ng put it well: “There is no realistic way to implement a moratorium and stop all teams from scaling up LLMs, unless governments step in. Having governments pause emerging technologies they don’t understand is anti-competitive, sets a terrible precedent, and is awful innovation policy.” Others argued that the sensationalist wording used in the letter contributes to obfuscating the actual capabilities of AI systems. They ask that AI be developed more transparently and that funding allocated to developing capabilities be coupled with funding for safety and alignment research.

Regardless of where you stand on A(G)I risks, a productive framing for why investing in AI safety and alignment work makes sense is that it is the path to developing robust and resilient software systems that don’t break or go haywire. It is also remarkable how 1-2 years ago, very few in the field or outside of it were calling attention to AI safety. In our 2022 State of AI Report, we created a section on AI Safety after noting in 2021 that fewer than 100 researchers were actively working on alignment in the major AI labs despite AI becoming a literal arms race. Back then we got quite some heat for publishing this… how times have changed.

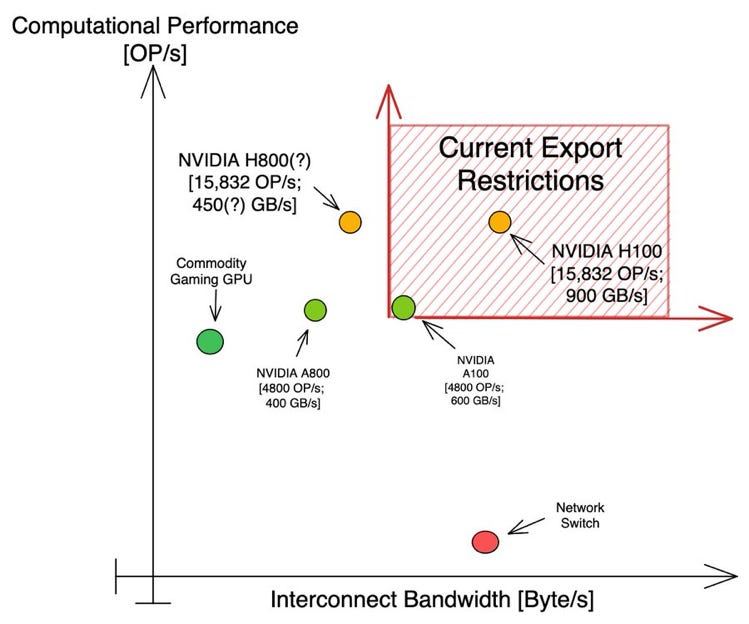

NVIDIA announced that it had developed a new H800 AI chip, a modified version of its most recent H100 chip that doesn’t fall under US export restrictions to China. This is another chip from the 800 series, following the A800, an export-compliant version of the A100 chip. While NVIDIA didn’t disclose much in terms of architecture designs in the H800, it seems that the difference with the H100 is in the data transfer rate, with the former having about half the data transfer rate of the latter. A lower data transfer rate translates to longer training times for AI models. See the schematic below for a nice visualization of the estimated differences between the state of the art chips and the 800 series (Source).

Anduril has become the flagship military AI startup, winning several government contracts and attracting significant venture capital investments. But according to this nice writeup in the WSJ, Anduril isn’t alone anymore. The US Defense Department is now more systematically luring Silicon Valley money by contracting with new startups. This is first driven by structural factors: AI is maturing and becoming less of a nebulous, dreamy technology, while pioneering startups like Palantir and SpaceX have proven that they can challenge larger, established, but less nimble companies. There is also a conjunctural aspect: Defense is a recession-proof sector, and political tension – which is generally bad for the economy – translates into more government contracts, and in turn VC investments. Pitchbook data indeed shows that US VC deals have grown from $1B in 2017 to $6B in 2022, with a dropoff of $1B from 2021 to 2022, which is a minuscule setback compared to the trend in tech investments. Still, it takes time for culture change to occur and experts interviewed in the article believe the DoD will still be inclined to stick with large primes for the foreseeable future, despite evidence of lower R&D returns. A lot rests on the shoulders of military AI pioneers, as if their job wasn’t hard enough already.

Meanwhile, in AI funding, it’s still the US vs. the rest. An analysis of the MAD (Machine Learning, AI, and Data) landscape, a list of 3,000 startups from all around the world compiled by FirstMark, shows that funding in the broad “data” sector in the US ($34B) is more than 4 times higher than in China, the second-best, and ten times higher than in the UK. Sadly, the EU seems to still be punching far below its economic weight.

The UK government is paying closer attention to AI. It published a white paper on its approach to AI regulation titled “A pro-innovation approach to AI regulation”. The general spirit of the white paper is self-explanatory: Regulation is key to building public trust in any new technology. But the fact that AI research is moving so fast (if not in terms of fundamental innovation, at least in terms of AI systems’ capabilities) makes the safety/innovation line a very fine one to walk. There is little consensus among AI experts on what regulation of (for example) LLM research would look like without a complete halt on such research. But AI is not (yet) all about LLM research, and there are many AI applications (e.g. health, defense) that can already be reasonably regulated. As a quick aside, from the white paper press release: according to the UK government, AI contributes £3.7 billion to the UK economy. This white paper came two weeks after the UK government announced the creation of a new task force to work on accelerating the development of foundation models in the UK and defining the way this would benefit the UK economy and society.

Meanwhile, Italy became the first Western country to ban ChatGPT over privacy concerns. The Italian regulation agency said ChatGPT has an "absence of any legal basis that justifies the massive collection and storage of personal data" to "train" the chatbot. OpenAI has now disabled ChatGPT in Italy. This ban came just 2 days after Italy also moved to ban cultivated meat 🐮. A sad example of Europe “leading” through regulation versus innovation.

🏭 Big tech

The wait for OpenAI’s GPT-4 is over. When the “huge” 1.5B GPT-2 came out back in 2019, it was met with relative silence. GPT-3’s release in 2020 then had the research community, as well as a few AI enthusiasts, wake up to the possibilities of scaling transformers in size and training data. Fast forward 3 years, and now the release of GPT-4 is a global event perhaps on the level of a new generation of iPhones. As soon as GPT-4 came out, it was integrated into ChatGPT and put into the hands of millions of daily users while every other technology company raced to integrate the model or similar ones. And as soon as the GPT-4 API becomes available, thousands of products will get a big upgrade – they already are via ChatGPT plugins! (more on this in a second). But let’s first make sense of all that happened around GPT-4:

How does GPT-4 fundamentally differ from GPT-3 and its instruction-finetuned versions? The single fundamental difference that we know about is that GPT-4 is multimodal: it has been trained not only on text, but also on images. This means it can accept images as inputs to generate text. It’s still a transformer pre-trained on publicly available data and data licensed from third-party providers, and it’s fine-tuned using RLHF. Another important detail is that GPT-4 by default comes with a context length of 8,192 tokens, but OpenAI will also be providing access to a 32,768-context length version (which is huge!). The GPT-4 Technical Report “contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar”. OpenAI justifies this by “the competitive landscape and the safety implications of large-scale models”.

How good is GPT-4? The researchers did a comprehensive evaluation of GPT-4 not only on classical NLP benchmarks, but also on exams designed to evaluate humans (e.g. Bar exam, GRE, Leetcode). A quick summary is that GPT-4 is the best model across the board. It solves some tasks that GPT-3.5 was unable to, like the Uniform Bar Exam, where GPT-4 scores around the 90th percentile of test takers compared to around the 10th percentile for GPT-3.5. On most tasks, the added vision component had only a minor impact, but it helped tremendously on others. Again, given that nothing about the training data or the models was disclosed, we have to trust that the data on which GPT-4 was trained didn’t contain the tests that it was evaluated on. OpenAI also released OpenAI Evals, its framework for creating and running benchmarks for evaluating LLMs. OpenAI stands to gain from this release: this gives them the chance to identify and understand potential failure cases of GPT-4, which is why it is “prioritizing API access to developers that contribute exceptional model evaluations to OpenAI Evals”.

How is GPT-4 deployed? For now, in ChatGPT, in Bing, and via restricted API access. But the release of ChatGPT plugins makes the integration of GPT-4 into ChatGPT a much bigger deal. Through plugins, ChatGPT has access to different applications (e.g. a web browser, a code interpreter, apps like Expedia or Instacart, etc.) and can answer user requests by making various API calls. For example, it can come up with a recipe and make an order on Instacart for the required ingredients. This is yet another step (deployment!) in the direction of embodied language models – we covered the latest advancements in this domain last month in our discussion of Meta’s Toolformer paper. This is probably also the most safety-critical step yet, and has many in the safety community freaked out and calling this step “reckless”.

Safety: Although OpenAI’s report didn’t reveal much about GPT-4, it did explain quite a bit about their methodology for improving the model’s safety and alignment. OpenAI “engaged over 50 experts from domains such as long-term AI alignment risks, cybersecurity, biorisk, and international security to adversarially test the model”. One example is that following expert findings, OpenAI collected additional data to improve GPT-4’s ability to refuse requests on how to synthesize dangerous chemicals. An additional component of the instruction fine-tuning pipeline is the use of zero-shot GPT-4 classifiers as reward models that reward GPT-4 on refusing to answer unsafe requests. OpenAI reports that they’ve decreased the model’s tendency to respond to requests for disallowed content (Table 6 in the paper) by 82% compared to GPT-3.5.

Pricing: The performance gain (and surely the model size) is reflected in pricing. “Pricing is $0.03 per 1k prompt tokens and $0.06 per 1k completion tokens”, compared to $0.002 per 1k tokens for gpt-3.5-turbo.
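To make the price gap concrete, here is a minimal Python sketch that turns the quoted per-1k-token prices into a per-request cost. The request size (1,500 prompt tokens, 500 completion tokens) is a hypothetical example, and we assume the gpt-3.5-turbo rate applies equally to prompt and completion tokens.

```python
# Prices quoted above, in USD per 1,000 tokens.
GPT4_PROMPT_PER_1K = 0.03
GPT4_COMPLETION_PER_1K = 0.06
# Assumption: gpt-3.5-turbo charges one flat rate for prompt and completion tokens.
GPT35_TURBO_PER_1K = 0.002


def gpt4_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """Cost in USD of one GPT-4 (8k context) request at the quoted prices."""
    return (prompt_tokens * GPT4_PROMPT_PER_1K
            + completion_tokens * GPT4_COMPLETION_PER_1K) / 1000


def gpt35_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """Cost in USD of the same request on gpt-3.5-turbo."""
    return (prompt_tokens + completion_tokens) * GPT35_TURBO_PER_1K / 1000


if __name__ == "__main__":
    prompt, completion = 1500, 500  # hypothetical request size
    g4 = gpt4_cost(prompt, completion)
    g35 = gpt35_cost(prompt, completion)
    print(f"GPT-4: ${g4:.4f}  gpt-3.5-turbo: ${g35:.4f}  ratio: {g4 / g35:.1f}x")
```

Because completion tokens cost twice as much as prompt tokens on GPT-4, the ratio depends on the prompt/completion mix and is not a single fixed multiple.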

In the face of relentless competition, Google is trying to deploy its own LLM-based solutions but, as expected, at a slower pace. Bard, Google’s chatbot that was announced last month, is now open to testing in the US and the UK. By most accounts, Bard is underwhelming, especially compared to ChatGPT.

The news this month felt like a repeated back and forth between OpenAI/Microsoft and their competitors. On the same day that GPT-4 was announced, Google announced an API to PaLM, their largest (540B parameters) and most capable language model. Apparently, the model that has been released is an “efficient” one in terms of size and capabilities. This means that Google isn’t releasing the best model it has yet… Other sizes will be added “soon”. Google Cloud also incorporated LLMs and text-to-image models into Vertex AI, its platform for building and deploying machine learning models. Anthropic, which is a more safety-focused AI research company, also launched its own AI chat assistant, accessible via API, called Claude.

Google is introducing new generative AI tools into Google Workspace. This includes a writing assistant for Docs and Gmail that can write entire texts or help users rewrite their text. Google announced a range of features on other Workspace Apps: image, audio and video generation in Slides, auto-completion and formula generation in Sheets, automatic notes in Meet, etc., but like the larger PaLM API models, none have been released yet. Google is either being very cautious with their products until they’re comprehensively safety-tested, or they’re simply running behind, can’t execute fast enough, and still need to respond to OpenAI/Microsoft’s frenetic shipping speed. Still, Bloomberg reports that an internal directive at Google requires generative AI to be incorporated into all of its biggest products within months. So maybe this will change soon. As a response, Microsoft announced its own suite of generative AI integrations into its productivity apps with Microsoft 365 Copilot. These include similar text functionalities to those that Google announced, plus more integrations with Microsoft Graph data (i.e. data from calendar, email, chats, other documents), etc. Copilot reportedly can create PowerPoint documents, analyze Excel spreadsheets, and take meeting notes. It’s also offered in the form of a chat experience in Microsoft Teams called Business Chat.

The second most used AI product in the world is getting a big upgrade soon. GitHub (a Microsoft company) announced Copilot X, an extension of the previous GitHub Copilot (which was designed for code completion) with several new functionalities: conversations with the AI coding assistant, information retrieval from code documentation, AI-generated pull request descriptions and automated unit tests, and AI assistance inside the terminal. We don’t have much detail about training data and the exact model behind the update, but GitHub refers to “early adoption of OpenAI’s GPT-4”. Remember that the initial GitHub Copilot was powered by OpenAI’s Codex, a smaller GPT model that was fine-tuned on GitHub code and was available to researchers. A few days after GitHub’s announcement, Google Cloud and Replit – which built a collaborative browser IDE – announced that they were starting a collaboration. “Under the new partnership, Replit developers will get access to Google Cloud infrastructure, services, and foundation models via Ghostwriter, Replit's software development AI, while Google Cloud and Workspace developers will get access to Replit’s collaborative code editing platform.” Ghostwriter was initially based on OpenAI models, but the usage price was too high for Replit. Through this deal, Replit gets privileged, discounted access to Google’s models (which may or may not be as good as OpenAI’s). Google benefits from access to a popular IDE (though far from being as popular as VSCode) where it can showcase the capabilities of its programming language models. Some on Twitter opined that Google should be bolder and acquire Replit.

As Elon Musk promised, Twitter ended up publishing most of the code for its tweet recommendation algorithm. Twitter says this will allow third parties (e.g. ad publishers) to have a better idea of what will be shown to users. For researchers and the ML community at large, this is an opportunity to see what the core technology of a social network looks like, even though Twitter is not known to be at the forefront of recommendation algorithm innovation. From a machine learning perspective, we got some details from Twitter’s blog post: for each user, the tweets of people they follow are ranked using a logistic regression model which hasn’t been redesigned and retrained for “several years” (!). Another logistic regression is used to rank the tweets of a user’s social graph and the tweets of users liking the same tweets as them. They generate embeddings of users using a custom factorization algorithm and use a ~48M parameter neural network that is “continuously trained on tweet interactions to optimize for positive engagement (e.g. Likes, Retweets, and Replies) and outputs ten labels to give each tweet a score, where each label represents the probability of an engagement.” They rank among 1,500 candidate tweets using these scores.
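To illustrate the last step, here is a minimal Python sketch of turning per-engagement probabilities into a single score and ranking candidates by it. This is not Twitter’s code: the weighted-sum combination, the three labels shown (out of ten) and the weights in `ENGAGEMENT_WEIGHTS` are all hypothetical choices made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical weights per engagement type. The blog post only says that each
# label is the probability of an engagement; how they are combined is assumed here.
ENGAGEMENT_WEIGHTS: Dict[str, float] = {"like": 0.5, "retweet": 1.0, "reply": 2.0}


def score(probabilities: Dict[str, float]) -> float:
    """Combine predicted engagement probabilities into one score (weighted sum)."""
    return sum(weight * probabilities.get(label, 0.0)
               for label, weight in ENGAGEMENT_WEIGHTS.items())


def rank(candidates: Dict[str, Dict[str, float]], top_k: int) -> List[Tuple[str, float]]:
    """Score every candidate tweet and return the top_k, highest score first."""
    scored = [(tweet_id, score(probs)) for tweet_id, probs in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_k]


if __name__ == "__main__":
    # Toy stand-in for the ~1,500 candidates and the neural network's outputs.
    candidates = {
        "a": {"like": 0.9, "retweet": 0.1, "reply": 0.0},
        "b": {"like": 0.2, "retweet": 0.1, "reply": 0.3},
        "c": {"like": 0.1},
    }
    for tweet_id, value in rank(candidates, top_k=2):
        print(tweet_id, round(value, 3))
```

With these made-up weights, a tweet that is likely to get replies can outrank one that is very likely to get a like, which is the kind of trade-off the choice of weights controls.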

🍪 Hardware (kinda)

Which AI company will strike the best deal with NVIDIA to build foundation models competitive with OpenAI, Anthropic, Cohere, or Google? Turns out, it’s NVIDIA itself. In an incredibly AI-dense keynote, NVIDIA announced the NVIDIA NeMo service, part of the NVIDIA AI foundations services (more on these in a second). NeMo offers access to a range of pre-trained LLMs ranging from 8B parameters to 530B (which NVIDIA built in collaboration with Microsoft Azure). Enterprise customers can also fine-tune the models on their proprietary data using prompt learning techniques or RLHF to better align the foundation models with their use cases and prevent undesired outputs. NVIDIA is also going multimodal. As part of the NVIDIA Picasso service, customers will be able to use advanced text-to-image, text-to-video and text-to-3D “Edify” models (based on their eDiff-I paper?). An interesting turn of events is that NVIDIA will partner with Getty Images and Shutterstock, the two largest suppliers of stock images, ensuring that the models are trained on licensed data and avoiding any potential lawsuits. NVIDIA and Adobe will moreover develop other visual models together that will be integrated into Adobe products like Photoshop and others. NVIDIA is also offering access to generative and predictive biomolecular AI models through its APIs. These are mostly existing protein models like DeepMind’s AlphaFold2 or Meta’s ESM series of models. All of this is enabled by the NVIDIA DGX Cloud platform, which gives customers access to eight NVIDIA H100 or A100 GPUs, starting at $37K per month. DGX Cloud will also be offered on cloud service providers, starting with Oracle Cloud, and soon on Microsoft Azure. Overall, Jensen’s GTC Keynote felt like a tour-de-force in AI product marketing. By controlling AI hardware (the chips) and the software that makes it run (CUDA), NVIDIA already had a privileged position in a world where AI would be a prevalent technology.
By going up the stack and controlling models, it signals an ambition of becoming a one-stop shop for all of an enterprise’s AI needs. To get there, the models need to actually be capable, and we’re looking forward to seeing how they fare against other LLMs on the HELM benchmark.

Interestingly, specialized AI hardware maker Cerebras trained and open-sourced its own suite of LLMs, dubbed Cerebras-GPT, whose sizes range from 111M to 13B parameters. All were trained “compute-optimally” in the sense that they used the findings of DeepMind’s Chinchilla paper regarding the relation between the number of training tokens and model size. All the models are readily available (without any waiting list) on HuggingFace. For Cerebras, this constitutes an opportunity to show customers that their AI chips can be used to train modern neural networks that are set to be the industry standard for the foreseeable future. It is also a way to attract researchers’ favor (weights included!), by releasing the models under a permissive Apache 2.0 license, unlike the other (kind of) open-source LLMs coming from the industry.
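For a feel of what “compute-optimal” implies at these sizes, here is a minimal Python sketch. It assumes the popular rule of thumb of roughly 20 training tokens per parameter that is commonly drawn from the Chinchilla paper, and the standard approximation that training costs about 6 FLOPs per parameter per token. Both are approximations we are bringing in, not figures stated above.

```python
# Rule of thumb commonly derived from the Chinchilla paper (an approximation).
TOKENS_PER_PARAM = 20


def compute_optimal_tokens(n_params: int) -> int:
    """Approximate number of training tokens for a compute-optimal model."""
    return TOKENS_PER_PARAM * n_params


def approx_training_flops(n_params: int, n_tokens: int) -> int:
    """Standard estimate: about 6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens


if __name__ == "__main__":
    # Smallest and largest Cerebras-GPT sizes mentioned above.
    for n_params in (111_000_000, 13_000_000_000):
        tokens = compute_optimal_tokens(n_params)
        flops = approx_training_flops(n_params, tokens)
        print(f"{n_params / 1e9:.3f}B params -> {tokens / 1e9:.1f}B tokens, "
              f"~{flops:.2e} training FLOPs")
```

Under this rule, compute grows with the square of model size, since both the parameter count and the token count scale together.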

🎁 Bonus section!

The acceleration of AI capabilities naturally comes with AI news acceleration. To not leave you behind, here are (briefly) a few more news items that caught our eye.

TOGETHER released OpenChatKit: TOGETHER offers cloud solutions for distributed training of large AI models. They collaborated with LAION and Ontocord to build LLMs fine-tuned for chat, which they open-sourced, together with the training dataset.

AI21Labs announced Jurassic-2: The Tel-Aviv based LLM company says their latest model ranks second on Stanford’s HELM benchmark of AI models (but it’s apparently not official yet since it doesn’t appear on the HELM website).

Midjourney is alpha-testing its V5: apparently, the image generation company cracked the hands problem (diffusion models had a tough time generating hands with correct shapes), and increased image quality overall. See examples here. Adobe (together with NVIDIA) announced Firefly, an image generation product trained on Adobe Stock photos. Creators will be able to add a “Do Not Train” tag to the photos they upload. Runway, the text-to-video generation company, announced Gen-2, the second version of their model.

LLMs, be careful of prompt injections: researchers showed that by inserting malicious instructions into web pages (that the LLM retrieves while answering), the LLM can end up following these instructions rather than what the user initially asked for. They tested it live on Bing and showed how it can be used to turn Bing Chat into a data pirate.

Salesforce Ventures launches $250M Generative AI Fund: It already invested in some big names like Anthropic and Cohere.

Intel co-founder and author of Moore’s law Gordon Moore died at 94. What an outstanding individual and legacy.

🔬Research

PaLM-E: An Embodied Multimodal Language Model, Google, Technische Universität Berlin. The best LLMs have a good apparent sense of the real world, often accurately answering practical questions, describing exactly how a process should be executed, etc. Some LLMs are able to carry out the tasks themselves in the digital world: they can be trained to respond to user requests by doing web searches, using a calculator to compute a quantity, or sending various API calls (as we covered in the previous edition of our newsletter). Google researchers show how real world tasks can be achieved by LMs by embodying them in a robot. They combine PaLM, Google’s pretrained decoder-only 540B parameter LM, with their largest Vision Transformer, ViT-22B, to create PaLM-E, an embodied LM that can handle multimodal inputs: text, images, state estimates, and other sensor modalities. As is often the case when training generalist agents, the idea here is to treat everything as a language modeling task. To do so, the authors use different encoders depending on the modality that map to the same dimension as the language embedding space. For example, for state vectors from a robot, they use an MLP with the right output size, and for images, they use ViT-22B topped with an additional affine map to make dimensions fit. Now for the robot embodiment part. For the relatively straightforward tasks of visual question answering (VQA) or scene descriptions, the model ingests the textual descriptions and the visual or sensory inputs, and it outputs an answer just as a classical multimodal model would do. For the more difficult embodied planning and control tasks, the model has access to policies that can perform a set of tasks (skills). The set of tasks is assumed to be small but the model is not explicitly constrained to that set (it is learned from the training data and prompts).
PaLM-E is integrated into a control-loop, where its predicted decisions are executed through the low-level policies by a robot, leading to new observations based on which PaLM-E is able to replan if necessary. The authors show that PaLM-E exhibits positive transfer and generalization: training on a range of different tasks improves the model’s performance on the individual tasks and on unseen tasks (such as new objects absent from the training data). As an aside, the model is also a decent visual-language model, as it establishes a new SOTA on OK-VQA, a VQA benchmark.
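The “map every modality into the language embedding space” idea can be sketched in a few lines of dependency-free Python. This is a toy illustration under our own assumptions, not the paper’s implementation: the dimensions, the random weights, the class names and the simple concatenation in `build_sequence` are all hypothetical, and the image patch features stand in for the output of a vision transformer.

```python
import random
from typing import List

Vector = List[float]
D_MODEL = 8  # toy language-embedding width; real models use thousands of dimensions


def make_matrix(rows: int, cols: int, rng: random.Random) -> List[Vector]:
    """Random (rows x cols) weight matrix standing in for learned parameters."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def affine(x: Vector, weight: List[Vector], bias: Vector) -> Vector:
    """Compute weight @ x + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


class StateEncoder:
    """Two-layer MLP mapping a robot state vector to one D_MODEL-sized token."""

    def __init__(self, in_dim: int, hidden: int, rng: random.Random) -> None:
        self.w1, self.b1 = make_matrix(hidden, in_dim, rng), [0.0] * hidden
        self.w2, self.b2 = make_matrix(D_MODEL, hidden, rng), [0.0] * D_MODEL

    def __call__(self, state: Vector) -> Vector:
        return affine(relu(affine(state, self.w1, self.b1)), self.w2, self.b2)


class ImageProjector:
    """Affine map taking each image patch feature to a D_MODEL-sized token."""

    def __init__(self, feat_dim: int, rng: random.Random) -> None:
        self.w, self.b = make_matrix(D_MODEL, feat_dim, rng), [0.0] * D_MODEL

    def __call__(self, patch_features: List[Vector]) -> List[Vector]:
        return [affine(p, self.w, self.b) for p in patch_features]


def build_sequence(text: List[Vector], image: List[Vector], state: Vector) -> List[Vector]:
    """Simplification: concatenate all tokens into one sequence for the LM."""
    return text + image + [state]


def demo() -> List[Vector]:
    rng = random.Random(0)
    text = [[rng.uniform(-1, 1) for _ in range(D_MODEL)] for _ in range(5)]
    patches = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)]
    image_tokens = ImageProjector(feat_dim=4, rng=rng)(patches)
    state_token = StateEncoder(in_dim=3, hidden=16, rng=rng)([0.1, -0.2, 0.3])
    return build_sequence(text, image_tokens, state_token)


if __name__ == "__main__":
    sequence = demo()
    print(len(sequence), "tokens of width", len(sequence[0]))
```

Once every input is a vector of the same width as a word embedding, the language model can consume the whole sequence without any architectural change, which is what makes “treat everything as language modeling” workable.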

Alpaca: A Strong, Replicable Instruction-Following Model, Stanford. Last month, Meta released a suite of LLMs (LLaMA) trained solely on open data, which were competitive with OpenAI’s GPT-3, DeepMind’s Chinchilla, and Google’s PaLM. Shortly after, the model weights leaked, and now the research community has a fully open source model in its hands. LLaMA is a “classic” LLM in the sense that it’s only trained via self-supervision. But the best available LLMs, e.g. OpenAI’s text-davinci-003, are instruction fine-tuned. So Stanford researchers took LLaMA 7B and fine-tuned it on 52K instructions. Normally, collecting such data would be very expensive since it requires a lot of human-written instructions. A workaround is to use another AI model to generate these instructions, as done in the self-instruct paper. This is what the researchers did, using OpenAI’s text-davinci-003. As a bonus, the system cost them <$600 to build.
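For readers who haven’t seen instruction-tuning data, here is a minimal Python sketch of how a generated instruction record might be turned into a prompt/completion pair for fine-tuning. The record fields (`instruction`, `output`) and the template text are illustrative assumptions on our side, not necessarily what the Stanford team used.

```python
from typing import Dict

# Illustrative template (an assumption, not necessarily the Stanford team's).
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def to_training_example(record: Dict[str, str]) -> Dict[str, str]:
    """Turn one instruction record into a prompt/completion pair."""
    return {
        "prompt": PROMPT_TEMPLATE.format(instruction=record["instruction"]),
        "completion": record["output"],
    }


if __name__ == "__main__":
    # Hypothetical record, of the kind a stronger model could be asked to generate.
    record = {
        "instruction": "Give three tips for staying healthy.",
        "output": "1. Eat a balanced diet. 2. Exercise regularly. 3. Sleep well.",
    }
    example = to_training_example(record)
    print(example["prompt"] + example["completion"])
```

The base model is then fine-tuned to continue each prompt with its completion, which is what teaches a “classic” LLM to follow instructions.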

LERF: Language Embedded Radiance Fields, UC Berkeley. Continuing on the trend of embedding language models into other systems, researchers from Berkeley grounded language embeddings from CLIP – a model that learns 2D visual concepts from text supervision – into NeRFs (Neural Radiance Fields) – the (mainly deep learning-based) SOTA method for generating 3D scenes from partial 2D views. The resulting model, LERF, “can extract 3D relevancy maps for a broad range of language prompts interactively in real-time, which has potential use cases in robotics, understanding vision language models, and interacting with 3D scenes.” A nice example of the use of LERF is in conjunction with ChatGPT (or virtually any other good LM) and a robot: for the task of cleaning a pour-over full of grinds on the counter of a kitchen, ChatGPT can give a sequence of tasks that require identifying a list of objects to use. LERF would identify the object locations, and the robot will perform the actions given a set of skills and the locations. This is a neat example of how combining the best of different model types can unlock new capabilities at a system level.

Google USM: Scaling Automatic Speech Recognition Beyond 100 Languages, Google. In this work, Google researchers confirm that for ASR too, large models trained on large diverse datasets are better than smaller ones specifically trained for narrow tasks. This time, the principle applies to ASR in multiple languages. They use 3 types of datasets: unpaired audio (incl. 12M hours of YouTube covering 300 languages), unpaired text (28B sentences spanning 1140 languages), and 200K+ hours of transcribed speech covering 70+ languages. The size of the labeled data is roughly 1/7th of that used for OpenAI’s (open-source) Whisper model. Yet, the USM models developed in this paper achieve comparable or better performance on most benchmarks.

Scaling up GANs for image synthesis, POSTECH, Carnegie Mellon University, Adobe Research. For more than two years now, diffusion models have been the de facto standard for high quality image synthesis. But despite rapid improvements in the speed of image generation with diffusion models, Generative Adversarial Networks (GANs) are still much faster at inference time. A natural question is whether using the same resources used for training diffusion models and innovations from diffusion model research could lead to an increase in GAN image quality without sacrificing speed. In this paper, researchers find that simply scaling up state of the art GAN models leads to unstable training. They confirm that diffusion-model inspired techniques like interleaving self-attention and cross-attention with the convolutional layers improve performance. Using numerous other improvements, the authors train a 1B parameter GAN called GigaGAN on LAION2B. GigaGAN is indeed able to generate high-quality images in a fraction of the time needed for diffusion models (0.13s for a 512px image, 3.66s for a 16Mpx image). Still, the authors report that it fails to be as good as DALL-E2 and has more failure cases. But perhaps the most eye-catching part of the paper is the upsampler, which can be used on top of text-to-image diffusion models (without ever seeing such images in its training set).

GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models, OpenAI, OpenResearch, University of Pennsylvania. For a change, this isn’t a paper on the capabilities of AI systems, but rather on the consequences of such capabilities on the labor market. The main thesis of the article is that Generative Pretrained Transformers (GPTs, e.g. OpenAI’s GPT family of models) are a General Purpose Technology. Per Wikipedia, these are “technologies that can affect an entire economy (usually at a national or global level)” that satisfy three criteria: improvement over time, pervasiveness throughout the economy, and the ability to spawn complementary innovations. This paper aims to provide evidence for the latter two criteria, with a focus on the impact of GPTs on the labor market. The authors find that on average, 15% of tasks within an occupation are directly exposed to GPTs, where exposure is defined as whether access to a GPT or GPT-powered system would reduce the time required for a human to perform a specific Detailed Work Activity (DWA) or complete a task by at least 50 percent. Moreover, “80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of GPTs”. The paper has many other more granular findings, such as which occupations are more at risk (higher-income jobs face greater exposure).
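The exposure definition is easy to express in code. The minimal Python sketch below applies the “at least 50 percent time reduction” threshold to a made-up occupation and computes the share of exposed tasks. The task names and timings are hypothetical, and using measured minutes is our simplification of the definition quoted above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    name: str
    minutes_without_llm: float
    minutes_with_llm: float


def is_exposed(task: Task, threshold: float = 0.5) -> bool:
    """A task is exposed if LLM access cuts the time needed by at least `threshold`."""
    time_saved = 1 - task.minutes_with_llm / task.minutes_without_llm
    return time_saved >= threshold


def exposure_share(tasks: List[Task]) -> float:
    """Fraction of an occupation's tasks that count as exposed."""
    return sum(is_exposed(task) for task in tasks) / len(tasks)


if __name__ == "__main__":
    # Hypothetical occupation with made-up timings.
    occupation = [
        Task("draft client email", 60, 30),       # 50% faster: exposed
        Task("site inspection", 120, 120),        # unchanged: not exposed
        Task("summarize meeting notes", 40, 25),  # 37.5% faster: not exposed
        Task("negotiate contract", 90, 80),       # ~11% faster: not exposed
    ]
    print(f"{exposure_share(occupation):.0%} of tasks exposed")
```

Note how the hard threshold works: a task that becomes 37.5% faster does not count at all, so the headline percentages say nothing about smaller productivity gains.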

Also on our radar:

Zero-shot prediction of therapeutic use with geometric deep learning and clinician centered design, Stanford, Harvard, Icahn School of Medicine at Mount Sinai. This paper models almost 20,000 diseases and therapeutic candidates to help doctors predict which drugs might be useful for rare diseases. A step up from traditional NLP-based data mining and graph modeling.

Sparks of Artificial General Intelligence: Early experiments with GPT-4, Microsoft. Microsoft researchers had early access to GPT-4. They start from the observation that since GPT-4 might have seen data from all possible benchmarks, evaluating it requires a methodology closer to psychology, and more generally argue for a subjective evaluation of GPT-4. They test the model on multimodal and interdisciplinary composition, coding, mathematical abilities, interaction with the world, interaction with humans, and discriminative capabilities. Should be an interesting read (but we haven’t read the 155 pages in detail yet!).

Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models, Microsoft: Combines recent vision models (BLIP, Stable Diffusion, ControlNet, etc.) with ChatGPT to make ChatGPT multimodal.

Singularity: Planet-Scale, Preemptive and Elastic Scheduling of AI Workloads, Microsoft: Outlines how Microsoft is able to train large deep learning models across different AI accelerators and different datacenters in different regions, while handling all types of parallelism (data, pipeline, model).

ChatGPT Outperforms Crowd-Workers for Text-Annotation Tasks, University of Zurich: ChatGPT outperforms crowd-workers on platforms such as MTurk on several annotation tasks while being 20 times cheaper.

💰Startups

Funding highlight reel

Adept, an Air Street Capital portfolio company founded by the creators of the transformer architecture and an ex-OpenAI VP of Engineering, raised a $350M Series B led by General Catalyst and Spark Capital, at a valuation of over $1B. The company is building a general AI agent capable of assisting humans on any task on a computer.

Autonomous drone maker Skydio raised $230M at a $2.2B valuation. The company has been in the news lately for donating hundreds of drones to the Ukrainian military, which uses them to document war damage and evidence of war crimes in unsafe regions. The funding will be used to expand its California factory to 10 times its original size.

Humane, a secretive company built by top Apple designers and engineers, and “building the first AI hardware and services platform”, raised a $100M Series C from multiple investors.

Artera, which builds AI tools for cancer testing and predicting cancer therapy benefits, raised a $90M funding round led by multiple investors, including Coatue and Johnson & Johnson Innovation.

Fairmatic, a company which uses machine learning to analyze businesses’ vehicle fleet risk for insurance purposes, raised a $46 million funding round led by Battery Ventures.

Lambda, which offers GPU cloud and deep learning hardware for training AI systems, secured a $44 million Series B round led by Mercato Partners. It is one of the launch partners for NVIDIA’s H100 chip.

Perplexity AI, a company building a conversational search engine, raised a $25.6 million funding round led by New Enterprise Associates. The company was founded by an ex-OpenAI research scientist (also ex-DeepMind and Google Brain intern).

German startup Parloa, which automates contact center call processing through conversational AI and low-code tools, raised a $21M Series A led by EQT Ventures.

Seldon raised a $20 million Series B funding round led by Bright Pixel Capital. The startup provides a data-centric MLOps platform for deploying and managing machine learning models.

Fixie, a company founded by top Google and Shopify executives and building extensible LLMs for enterprise consumers, raised a $17M funding round from Redpoint Ventures, Madrona, Zetta Venture Partners, SignalFire, Bloomberg Beta and Kearny Jackson.

Numbers Station, a company which uses foundation models to automate data transformation and record matching, raised a $17.5 million Series A led by Madrona Venture Group. This is yet another company coming from Stanford professor Chris Ré’s lab. He has now co-founded 5 companies, including SambaNova, SnorkelAI, and Lattice (acq. Apple).

French startup Iktos, which develops artificial intelligence solutions for chemical synthesis and drug discovery, raised a €15.5 million Series A funding round co-led by M Ventures and Debiopharm Innovation Fund.

Unlearn.ai, a company which uses machine learning to create “digital twin” profiles of patients in clinical trials, raised a $15 million funding round co-led by Radical Ventures and Wittington Ventures. The company, now valued at $265M, also added Mira Murati (CTO of OpenAI) to its board. Unlearn.ai also gained approval from the European Medicines Agency in Sept 2022.

Mythic, a company which develops artificial intelligence chips for edge computing, raised a $13 million funding round led by Atreides Management, DCVC and Lux Capital. The company’s new CEO said Mythic would now be focusing on the defense sector.

Protai, another AI-powered drug discovery startup, raised a $12M Seed led by Grove Ventures and Pitango HealthTech.

IPRally, which uses graph neural networks to more accurately search through huge databases of patents, raised a $10M Series A led by Endeit Capital.

DeepRender, which develops AI-based compression technology, raised a $9 million Series A funding round led by IP Group and Pentech Ventures.

Exits

ClipDrop (an Air Street Capital portfolio company), makers of a suite of AI APIs for visual creators, was acquired by open source AI model leader Stability AI. The company will continue to operate its brand and products under the Stability umbrella.

SceneBox, a company focused on data operations for machine learning, was acquired by simulation company Applied Intuition.

WaveOne, a video compression startup founded in 2016, was acquired by Apple after raising $9M in total.

---

Signing off,

Nathan Benaich, Othmane Sebbouh, 2 April 2023

Air Street Capital | Twitter | LinkedIn | State of AI Report | RAAIS | London.AI

Air Street Capital is a venture capital firm investing in AI-first technology and life science companies. We’re an experienced team of investors and founders based in Europe and the US with a shared passion for working with entrepreneurs from the very beginning of their company-building journey.
